Precision in digital analytics is unobtainable. This isn’t an edgy statement, it’s a fact. You will never achieve precision in your Adobe Analytics account. Your A/B test data will always contain noise. If your company is larger than a mom-and-pop shop then your automated QA tools will never be totally comprehensive. As an analyst, you must figure out a way to be effective while not becoming exhausted trying to achieve analytics data precision. Let’s start with a list of noise sources we get out-of-the-box with any analytics tool.

Uncontrollable Noise

- Clever spam bots inflate/distort data

- Ad blockers stop data collection

- People clear their cookies

- Testing site traffic

- Some visitors use proxies

You get the idea. We have no choice but to live with this noise. While this can be mitigated, it cannot be eliminated. Uncontrollable noise has been a nuisance and have gotten worse over the past 5 or 6 years with the spread of ad blockers and sophisticated spam bots. Let’s assume you’ve taken the right steps to minimize this noise. Apart from some basic filters, there isn’t a heck of a lot more you can do to prevent this from affecting your data.

Controllable Noise

- Routine site releases

- Dynamic tagging with CSS selectors

- Legacy tracking code

- Broken site code

- Inconsistent data layer

“Controllable” might not be the best way to put it since you might not really have much immediate control over this stuff. This is often what I spend most of my time on when I QA. They’re the easiest levers to pull.

Thoughts

It’s up to you, but analytics precision can often stop at “good enough”. This means you aren’t burning cycles ensuring every single click tracking event is perfect before every single release and it means you are comfortable delivering data even though you know some testing traffic slipped through the cracks. I make decisions with data that isn’t precise every day. You do, too… as long as you’re confident it’s directionally accurate. What’s important is that you’re making decisions with data. Not enough people are doing that and instead going down an endless rabbit hole of QA. I might be the minority here, but I believe it’s better to occasionally make a wrong decision with data than it is to let precision paranoia scare me into driving fewer decisions.

That said, there are some QA tools that are supposed to make your life easier. When it comes to data quality, there are tools out there that can automate the QA monitoring; but as we shorten our sprint timeboxes and quickly approach continuous integration, maintaining the integrity of our implementation only becomes more cumbersome.

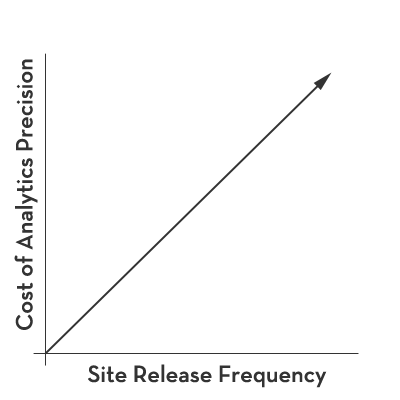

As you can see from this very scientific model, there is a direct relationship between the cost of analytics precision and site release frequency. I’m just using common sense here – something’s gotta give and I can guarantee that they won’t slow down to wait for your click tracking to be perfect. The reason I built the Tagtician web app was to provide QA to users without the overhead of micromanaging simulations and server calls every time the site changed. The philosophy behind the tool directly ties back to the representation in the chart above. Improving the speed and efficiency of your development cycles shouldn’t create that much more work for the analyst. At some point, the value of the analyst is completely undermined by the cost of maintaining the tracking and complex simulations in other QA tools. With or without an automated QA tool, the momentum behind shorter and shorter release cycles is creeping upward.

Is your company moving on the path towards continuous integration? How do you think this trend will affect your job? Do you think it will increase the time you allocate to QA work?